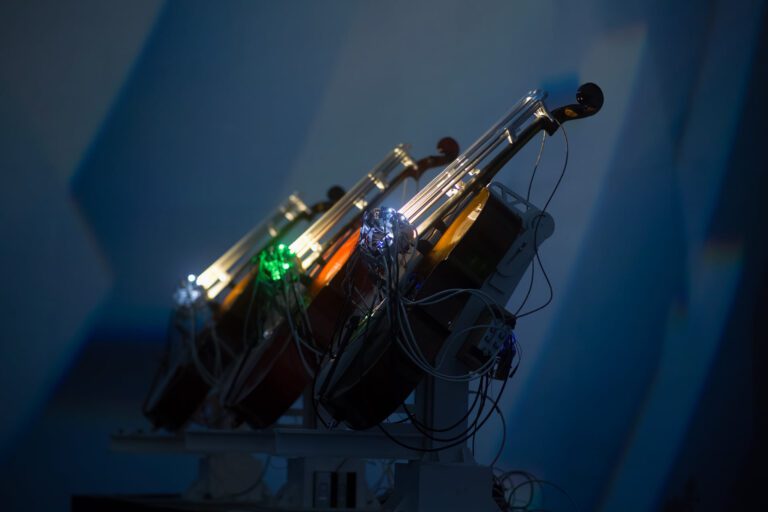

In many fields, artificial intelligence can be seen as a threat to a large share of professions, which may soon be more and more easily replaced by robots. For better or for worse… With this prospect on the horizon, many see a dystopian future with no way back, while some still manage to see it as a new playground for exploration, both technical and metaphysical. Presented at the SAT for the second time, the Empty Vessels project by David Gardener (Montreal Life Support) and Greg Debicki (Woulg) combines music, robotics, and artificial intelligence in an innovative way, to see how a robotic AI could inhabit an experimental cello concerto by itself. Developed from the latest research in the field, a network of artificial neurons is connected in symbiosis with the cello through four rotating bows, which constitute the visible luminous heart of the machine. Even if the machine manages to occupy the space and replace the human on stage, Empty Vessels has the potential to transform the frightening vision of a self-destructive, inhuman, nihilistic void into a fertile and organic meditation on emptiness. Through lines of code, the performers finally manage to hide, within their empty vessels, the sincere memories of a child learning, naively and sometimes clumsily, to master his new instrument.

PAN M 360: David, you do light, sound, and kinetic sculptures with your Montreal Life Support project. Would you like to talk about your background?

Montreal Life Support: I grew up in the UK and worked as a design engineer for experiential architecture companies, which is basically where I developed my skill set in electronics and mechanical engineering. I then established my own practice and started building products for myself as a visual artist and designer. I moved to Montreal about five years ago and renamed myself Montreal Life Support. It’s a reference to culture having been dying in the UK for some time now: big businesses are pushing all the artists out of London. Montreal is my life support because there is so much culture and so many DIY venues here.

PAN M 360: What about you, Greg? You are the one behind the algorithm programming. Would you like to share your background too?

Woulg: I studied new media art at Alberta University of the Arts. I was interested in making interactive installations or really long-format performances, of 12 hours or longer for example. From there, I got interested in creative coding and that sort of thing. When I left school, I realized that the art I was interested in was way too expensive for a normal person’s budget. But I kept making music and that’s where most of my energy went for a long time. I make lots of plugins and interfaces for my music performances. That’s where the art programming came from. I have a big interest in generative music, in AI, and especially in the neural networks coming from the new wave of AI research.

PAN M 360: Would you like to talk a bit about how you developed the project?

Montreal Life Support: I was designing and building the robots to be able to play the cellos. Cellos are built for human bodies: they are very curvy, there is no flat surface, and there is nothing to attach anything to. A lot of the development was working out how to build a robot that can interface with an organic form. Most robots work on two or three axes in a linear way. This one had to be built completely from scratch to fit the cello. It’s still in development; it’s version 2.5 now.

Woulg: There are AIs for generating classical, jazz, or pop melodies. We really wanted to try to make something that felt more like electronic experimental music. For generating that, there is nothing open-source for electronic experimental cello music (laughs). We generated a bunch of writing and chord progressions that we liked so that we could train the AI on things that were to our taste.

PAN M 360: Does either of you know how to actually play the cello?

Montreal Life Support: I grew up playing the cello. What sets it apart from other instruments is that you can play infinite notes. In that respect, it is very organic. Knowing how to play helped the robot develop its full sound.

PAN M 360: One of the first questions that comes to mind when we see the show is: where is the bow? In your view, does it start at the software or is it at the end of the robotic structure? Or is the whole robotic AI considered the bow?

Montreal Life Support: Physically, there is a small rotating bow on each string. The reason for doing that is to play the four strings at the same time. With a normal cello, you can play one or two strings at a time, and we wanted to play as many notes as we wanted. You can hear the loop of the circle.

Woulg: It reminds me of the mellotron. There is a certain quality to the loop of the bow turning. In a certain way, it opens new and different possibilities to play the cello and it closes the possibilities of a normal cello at the same time.

Montreal Life Support: The title Empty Vessels refers to the absence of human presence. Removing the bow drives home this emptiness of humanity. You are just left with the instrument.

Woulg: So the bow is missing…

PAN M 360: For now, you can hear the stop-start mechanical rhythm of the robotic AI. How do you feel about it?

Montreal Life Support: The goal is for it to match the fluid sound of a human playing. The bows are made of plastic, and you can hear it in the sound as well. It makes the sound more aggressive. The end goal is to play beautiful string music.

Woulg: In this version, we liked those kinds of stop-start mechanical sounds. Conceptually, that’s an important part of the piece. Sometimes, there is a kind of scraping that makes me think of futurism in music, where they had the sounds of trains and scraping metal.

PAN M 360: How autonomous is the robotic AI so far?

Woulg: Right now, it’s not autonomous. It can generate music, chord progressions, and rhythms. The fun part of working with AI is that in a fraction of a second you have 10,000 hours of music. The hard part is finding the good bits. Learning about neural networks and what is possible changed the music we ended up with in the project. We are not at the stage where we can press a button and it plays forever. Hopefully, we’ll have that in the next version.

PAN M 360: In your view, who plays the cello? You or the robotic AI?

Woulg: For now, it’s kind of both, I would say. To a certain extent, we’re going to have to be the interpreters of what the AI spits out.